Elastic Support Hub moves to semantic search

We’re excited to share a recent enhancement made to the Elastic Support Hub: it’s now powered by semantic search!

But before we go into more detail on the changes we made to the Elastic® Support Hub and their impact on our customers, it's worth taking a moment to explain the concept of semantic search. At its core, semantic search is a method of search that uses AI to return more relevant search results. Take a look at this quick video explaining the concept:

As shown in the video, semantic search matches the intent behind a user's query to the available content, rather than matching only the words in the query. You can read more about the AI behind it on our blog, Introducing Elastic Learned Sparse Encoder: Elastic’s AI model for semantic search. The rest of this blog tells the story of how we moved the Elastic Support Hub to semantic search.

Why did we make this change?

All technology news these days seems to have something to do with large language models and generative AI. Elastic is leading the charge with its vector database capabilities and built-in natural language models. It makes sense that we should build our supporting applications on the same bleeding edge that our product lives on. By making this change now, we can provide feedback to our product development teams and make the product better for everyone.

Biggest takeaways from configuring semantic search

As with most new technology, adopting semantic search meant tearing down and replacing older code, and potentially updating the underlying architecture. Our internal app development team faced these challenges head-on, and we are now in a much better position to iterate on any of Elasticsearch®’s new features. From our team's point of view, two features stood out in the setup process:

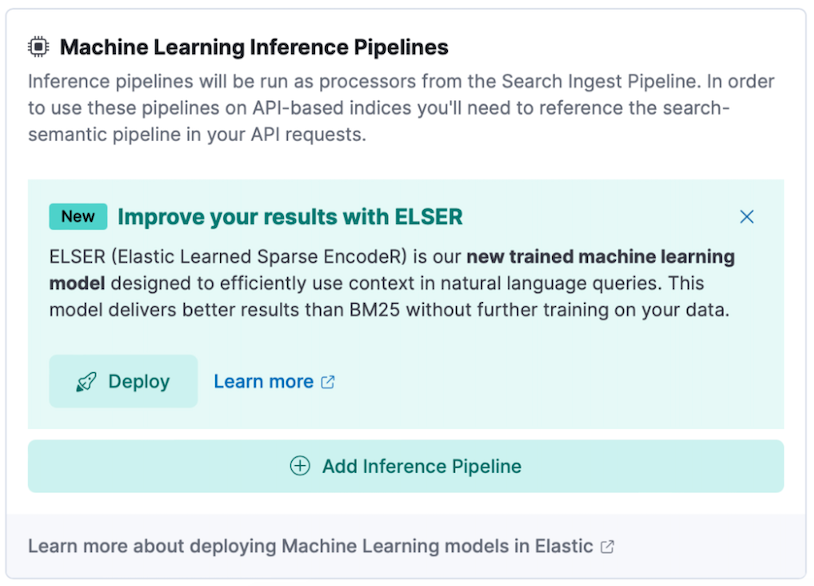

1. Because ELSER, Elastic’s proprietary transformer model for semantic search, is a relatively new feature in Elasticsearch (8.8), our development team was happy to see a guided UI experience for enabling Elasticsearch ingest pipelines with ELSER.

This allowed our developers to quickly add the text expansion configuration that makes semantic search possible to the ingest pipeline. It made getting started much easier and let us see results sooner.

2. A machine learning model like ELSER takes dedicated machine resources to run (minimum 4GB). Since we were already running on Elastic Cloud, we were able to enable dedicated machine learning (ML) nodes with autoscaling to accommodate our resource demands and see more consistent performance.
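For readers who prefer to see the text expansion configuration from the first point spelled out rather than clicked through, here is a minimal sketch of what such an ingest pipeline body can look like. It is not the Support Hub's actual configuration: the source field `body`, the target field `ml`, and the results field `tokens` are illustrative assumptions, and `.elser_model_1` is assumed to be the model ID of the deployed ELSER model. The helper only builds the JSON body for a `PUT _ingest/pipeline/<name>` request; it does not contact a cluster.

```python
import json


def build_elser_pipeline(source_field: str, model_id: str = ".elser_model_1") -> dict:
    """Build an ingest pipeline body with a single ELSER inference processor.

    source_field is the (hypothetical) document field holding the article text.
    """
    return {
        "description": "Expand article text into ELSER tokens at ingest time",
        "processors": [
            {
                "inference": {
                    "model_id": model_id,
                    # Results are written under this field (assumed name).
                    "target_field": "ml",
                    # Map our document field to the input name the model expects.
                    "field_map": {source_field: "text_field"},
                    "inference_config": {
                        "text_expansion": {"results_field": "tokens"}
                    },
                }
            }
        ],
    }


if __name__ == "__main__":
    # Print the request body so it can be pasted into a console or sent by a client.
    print(json.dumps(build_elser_pipeline("body"), indent=2))
```

With these assumed names, the expanded tokens end up in `ml.tokens`, which would need to be mapped as a `rank_features` field in the destination index before documents are ingested.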

Early evaluation of search results

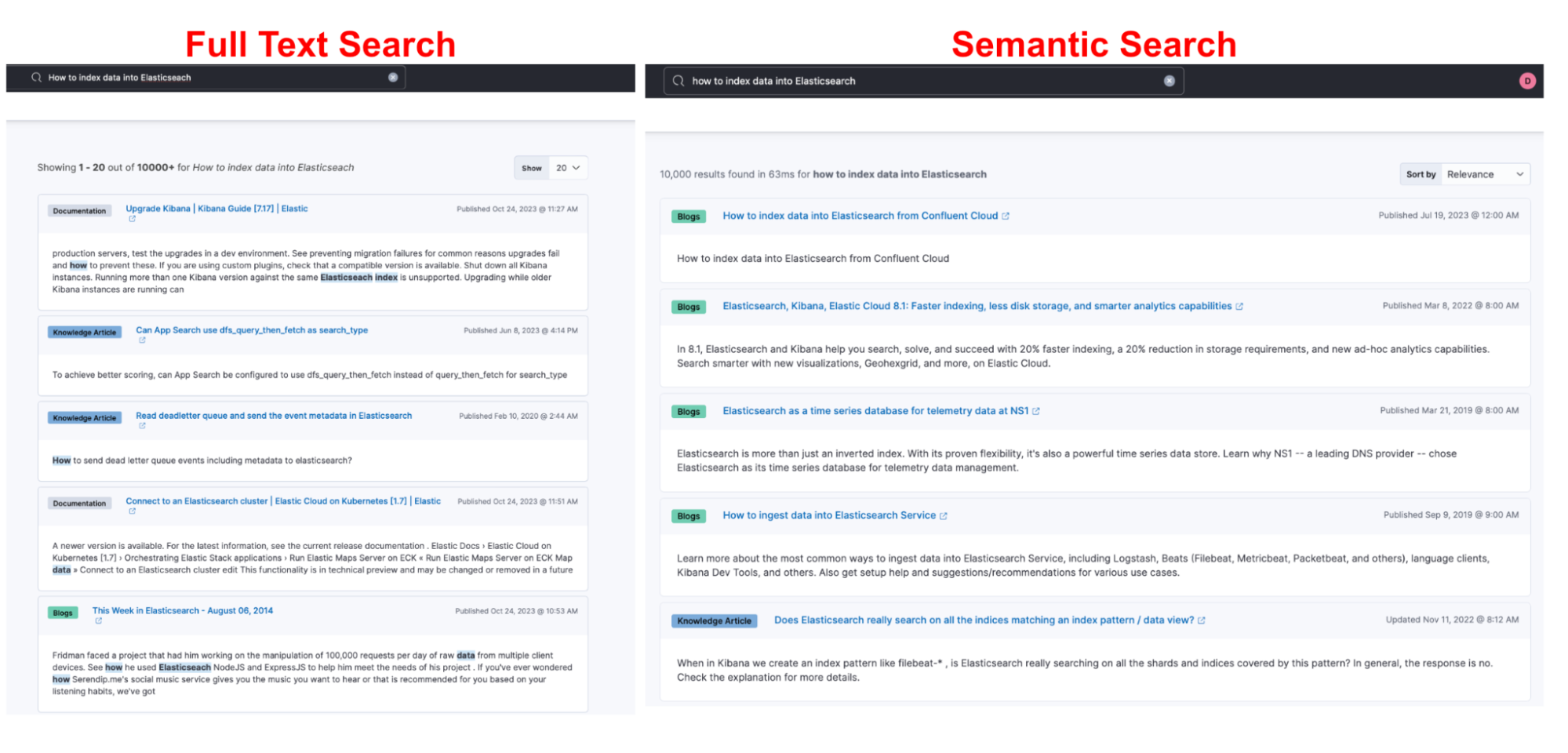

We are still putting systems in place to help us understand user queries, search results, and relevancy at scale. Even so, our user testing already shows significant improvements for a range of queries. For example, we tested the phrase “How to index data into Elasticsearch” on both our standard full-text search and our new semantic search implementations.

Here is a side-by-side comparison of the two search methods.

While there isn’t a single article that explains all the ways you can index data (there are a lot), you can see how fundamentally different these results are. For full-text search, we have a mix of guides, troubleshooting articles, and a blog with matched keywords, but none of them answer the question of “how.” Or to say it differently, text search didn't capture the meaning (semantically) of the query and did its best to match keywords.

For the semantic search results, you can see blogs that generally relate to the indexing of data. What is even more interesting is the fourth returned result of “How to ingest data into Elasticsearch Service” as the term ingest is actually more relevant to the process of adding data to an index. Elastic’s out-of-the-box transformer model picked up on the semantic meaning of adding data to an index and returned more relevant results regardless of the exact keywords.
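To make the difference between the two approaches concrete, the sketch below builds the two kinds of search request body for the same test phrase. It is an illustration under assumptions, not the queries the Support Hub actually runs: the field names `body` and `ml.tokens` and the model ID `.elser_model_1` are hypothetical placeholders, and the code only constructs request bodies without sending them anywhere.

```python
import json

TEST_PHRASE = "How to index data into Elasticsearch"


def full_text_query(field: str, text: str) -> dict:
    """Keyword-based search: score documents by matching analyzed terms."""
    return {"query": {"match": {field: text}}}


def semantic_query(tokens_field: str, text: str,
                   model_id: str = ".elser_model_1") -> dict:
    """ELSER-based search: expand the query text with the model and match
    the result against the tokens stored at ingest time."""
    return {
        "query": {
            "text_expansion": {
                tokens_field: {"model_id": model_id, "model_text": text}
            }
        }
    }


if __name__ == "__main__":
    # Field names below are assumed for illustration only.
    print(json.dumps(full_text_query("body", TEST_PHRASE), indent=2))
    print(json.dumps(semantic_query("ml.tokens", TEST_PHRASE), indent=2))
```

The `match` query can only reward documents that share terms with the query, whereas the `text_expansion` query lets the model weigh related terms such as "ingest", which is consistent with the fourth result described above.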

What’s next?

While we see this as a gigantic leap forward in our ability to provide customers with relevant search results, we know our work is not done. Over time, we will evaluate the data we have on terms searched, results, and articles read. This data will allow us to add synonyms and configure appropriate weights and boosts to give you, our customers, the best experience when searching for Elastic content on support.elastic.co.
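As a rough idea of what configuring weights and boosts could look like, the sketch below combines a semantic clause with a keyword clause in a single `bool` query and gives each its own boost. Everything specific here is an assumption for illustration: the field names `ml.tokens` and `title`, the model ID `.elser_model_1`, and the boost values are placeholders rather than tuned settings, and real values would come from the search data described above.

```python
import json


def hybrid_query(text: str,
                 tokens_field: str = "ml.tokens",
                 title_field: str = "title",
                 semantic_boost: float = 1.0,
                 title_boost: float = 2.0,
                 model_id: str = ".elser_model_1") -> dict:
    """Build a bool query whose two `should` clauses contribute to the score
    in proportion to their (hypothetical) boosts."""
    return {
        "query": {
            "bool": {
                "should": [
                    {
                        "text_expansion": {
                            tokens_field: {
                                "model_id": model_id,
                                "model_text": text,
                                "boost": semantic_boost,
                            }
                        }
                    },
                    {
                        # Keyword match on the title, weighted separately.
                        "match": {
                            title_field: {"query": text, "boost": title_boost}
                        }
                    },
                ]
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(hybrid_query("How to index data into Elasticsearch"), indent=2))
```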

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.