What is generative AI?

Generative AI definition

Explanation for a student (basic):

Generative AI is a technology that can create new and original content like art, music, software code, and writing. When users enter a prompt, artificial intelligence generates responses based on what it has learned from existing examples on the internet, often producing unique and creative results.

An explanation for a developer (technical):

Generative AI is a branch of artificial intelligence centered around computer models capable of generating original content. By leveraging the power of large language models, neural networks, and machine learning, generative AI is able to produce novel content that mimics human creativity. These models are trained using large datasets and deep-learning algorithms that learn the underlying structures, relationships, and patterns present in the data. The results are new and unique outputs based on input prompts, including images, video, code, music, design, translation, question answering, and text.

How does generative artificial intelligence work?

Generative AI models work by using neural networks inspired by the neurons in the human brain to learn patterns and features from existing data. These models can then generate new data that aligns with the patterns they've learned. For example, a generative AI model trained on a set of images can create new images that look similar to the ones it was trained on. It's similar to how language models can generate expansive text based on words provided for context.
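
To make the idea of learning patterns and then generating new data concrete, here is a minimal sketch. It is a toy word-pair (bigram) model, not a neural network, and the function names `train_bigram_model` and `generate` are illustrative assumptions rather than part of any library. It records which words follow which in a tiny training text, then produces new text that follows those patterns.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Record, for every word, which words followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Generate up to `length` words by repeatedly sampling a learned follower."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = followers.get(output[-1])
        if not candidates:  # dead end: this word never had a follower
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

# Toy training text (an assumption for illustration only).
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
print(generate(model, start="the", length=8))
```

Every generated word pair appeared somewhere in the training text, yet the full sentence may be new. Real generative models replace the lookup table with a neural network that generalizes far beyond exact pairs.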

Generative AI leverages advanced techniques like generative adversarial networks (GANs), large language models, variational autoencoders (VAEs), and transformers to create content across a wide range of domains. These approaches are explained in more detail below.

Learning from large datasets, these models can refine their outputs through iterative training processes. The model analyzes the relationships within given data, effectively gaining knowledge from the provided examples. By adjusting their parameters and minimizing the difference between desired and generated outputs, generative AI models can continually improve their ability to generate high-quality, contextually relevant content. The results, whether it's a whimsical poem or a chatbot customer support response, can often be indistinguishable from human-generated content.
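
The parameter-adjustment loop described above can be sketched with gradient descent on a deliberately tiny model. This is an illustration under simplifying assumptions: the model is a single line `y = w * x + b` rather than a neural network, and the name `train_linear_model`, the learning rate, and the data are all made up for the example.

```python
def train_linear_model(examples, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = (w * x + b) - y      # generated output minus desired output
            grad_w += 2 * error * x / n  # derivative of the squared error w.r.t. w
            grad_b += 2 * error / n      # derivative of the squared error w.r.t. b
        # Move each parameter a small step in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data drawn from y = 2x + 1 (an assumption for illustration).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_model(data)
print(w, b)  # should end up close to 2 and 1
```

Large generative models follow the same principle, but with billions of parameters and gradients computed automatically by a deep-learning framework.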

From a user perspective, generative AI often starts with an initial prompt to guide content generation, followed by an iterative back-and-forth process exploring and refining variations.

Types of generative AI models

Generative AI employs various models to enable the creation of new and original content. Some of the most common types of generative AI models include:

Generative adversarial networks (GANs): A GAN consists of two key components: a generator and a discriminator. The generator generates synthetic data based on the patterns it has learned from the training data. The discriminator acts as a judge to evaluate the authenticity of that generated data compared to real data and decides if it's real or fake. The training process teaches the generator to produce more realistic outputs while the discriminator improves in distinguishing between genuine and synthetic data. GANs are widely used in image generation and have demonstrated impressive results in creating uncannily realistic visuals.
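
The generator and discriminator training loop can be sketched on one-dimensional data. Everything here is a simplifying assumption for illustration: the "real" data are numbers drawn around 4.0, the generator is a single line `a * z + b`, the discriminator is a single logistic unit, and gradients are written out by hand. Real GANs use deep networks and a framework's automatic differentiation, and even this toy version may oscillate rather than settle.

```python
import math
import random

def sigmoid(s):
    s = max(-60.0, min(60.0, s))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-s))

def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: fake = a * z + b
    w, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # one sample of "real" data
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        fake = a * z + b

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * ((d_real - 1.0) * real + d_fake * fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # Generator step: push D(fake) toward 1 (non-saturating loss -log D(fake)).
        d_fake = sigmoid(w * fake + c)
        grad_fake = (d_fake - 1.0) * w  # gradient of the loss w.r.t. the fake sample
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return (a, b), (w, c)

(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
print("generator offset b:", gen_b)
```

The generator never sees the real data directly. It improves only through the discriminator's feedback, which is the core idea behind adversarial training.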

Variational autoencoders (VAEs): VAEs are neural networks that learn to encode and decode data. An encoder compresses the input data into a lower-dimensional representation called the latent space. Meanwhile, a decoder reconstructs the original data from the latent space. VAEs enable the generation of new data by sampling points in the latent space and decoding them into meaningful outputs. The approach is particularly valuable in image and audio synthesis, where latent representations can be manipulated to produce diverse and creative outputs.
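
Two pieces of a VAE can be sketched briefly: sampling a point in the latent space and decoding it. This sketch assumes a Gaussian latent space, and the `decode` function with its hand-written weights is a hypothetical stand-in for a trained decoder network. The helper `kl_divergence` shows the standard penalty that keeps the encoder's output close to a unit Gaussian during training.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

def decode(z, weights, bias):
    """Hypothetical linear decoder mapping a latent point back to data space."""
    return [sum(w * zi for w, zi in zip(row, z)) + b
            for row, b in zip(weights, bias)]

rng = random.Random(0)
# Generating new data: sample a latent point from the prior N(0, 1) and decode it.
z = reparameterize(mu=[0.0, 0.0], log_var=[0.0, 0.0], rng=rng)
# Untrained, made-up decoder weights (2 latent dimensions -> 3 output values).
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, 0.5]
print(decode(z, weights, bias))
```

Moving `z` smoothly through the latent space and decoding each point is what allows latent representations to be manipulated into varied outputs.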

Large language models (LLMs): LLMs, like the GPT (Generative Pre-trained Transformer) models behind ChatGPT, are trained on vast volumes of textual data. These sophisticated language models draw on sources ranging from textbooks and websites to social media posts. They leverage transformer architectures to understand and generate coherent text based on given prompts. Transformer models are the most common architecture of large language models. The original transformer design consists of an encoder and a decoder, although many LLMs, including the GPT family, use only the decoder. Transformers process data by splitting a prompt into tokens and discovering the relationships between them.

Essentially, transformer models predict what word comes next in a sequence of words to simulate human speech. LLMs have the ability to engage in realistic conversations, answer questions, and generate creative, human-like responses, making them ideal for language-related applications, from chatbots and content creation to translation.
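
The way a transformer relates tokens to one another can be illustrated with scaled dot-product attention, the operation at the core of the architecture. This is a minimal sketch under stated assumptions: the three token vectors are made up, and the same vectors are used as queries, keys, and values, whereas a real transformer first applies learned projections and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score how strongly this token relates to every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those relationship weights.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical 2-dimensional embeddings for a three-token prompt.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row is a context-aware version of one token: tokens with similar vectors contribute more to each other's representation. The model uses these representations to predict the next token.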

What are the benefits of generative AI?

Generative AI offers powerful benefits on both an individual and commercial level. And its impact will only grow as the technology evolves. In the short term, one of the most immediate and significant benefits is increased efficiency and streamlined workflows. The ability to automate tasks saves both people and enterprises valuable time, energy, and resources. From drafting emails to making reservations, generative AI is already increasing efficiency and productivity. Here are just a few of the ways generative AI is making a difference:

- Automated content creation allows businesses and individuals to produce high-quality, customized content at scale. This is already having an impact across domains, particularly in advertising, marketing, entertainment, and media production.

- Generative AI can serve as an inspirational tool for artists, designers, writers, architects, and other creators, enabling them to explore fresh possibilities, generate new ideas, and push the boundaries of their creative work. Collaborating with generative AI, creators can achieve once unimaginable levels of productivity, paving the way for more artwork, literature, journalism, architecture, video, music, and fashion.

- Generative AI models can be utilized for problem-solving tasks that require new solutions or ideas, as well as for analyzing data to improve decision-making. For example, in product design, AI-powered systems can generate new prototypes or optimize existing designs based on specific constraints and requirements. The practical applications for research and development are potentially revolutionary. And the ability to summarize complex information in seconds has wide-reaching problem-solving benefits.

- For developers, generative AI can streamline the process of writing, checking, implementing, and optimizing code.

- For consumer-facing businesses, generative AI-driven chatbots and virtual assistants can provide enhanced customer support, reducing response times and the burden on resources.

What are the challenges and limitations of generative AI?

While generative AI holds tremendous potential, it also faces certain challenges and limitations. Some key concerns include:

Data bias: Generative AI models rely on the data they are trained on. If the training data contains biases or limitations, these biases can be reflected in the outputs. Organizations can mitigate these risks by carefully curating the data their models are trained on, or using customized, specialized models specific to their needs.

Ethical considerations: The ability of generative AI models to create realistic content raises ethical concerns, such as the impact it will have on human society, and its potential for misuse or manipulation. Ensuring the responsible and ethical use of generative AI technology will be an ongoing issue.

Unreliable outputs: Generative AI models, including LLMs, have been known to hallucinate responses, a problem that is exacerbated when a model lacks access to relevant information. This can result in incorrect or misleading answers that sound factual and confident. And the more realistic-sounding the content, the harder it can be to identify inaccurate information.

Domain specificity: A lack of knowledge about domain-specific content is a common limitation of generative AI models like ChatGPT. Models can generate coherent and contextually relevant responses based on the information they are trained on (often public Internet data), but they're often unable to access domain-specific data or provide answers that depend on a unique knowledge base, such as an organization's proprietary software or internal documentation. You can minimize these limitations by providing access to documents and data specific to your domain.

Timeliness: Models are only as fresh as the data that they are trained on. The responses models can provide are based on "moment in time" data that is not real-time data.

Computational requirements: Training and running large generative AI models require significant computational resources, including powerful hardware and extensive memory. These requirements can increase costs and limit accessibility and scalability for certain applications.

Data requirements: Training large generative AI models also requires access to a large corpus of data that can be time intensive and costly to store.

Sourcing concerns: Generative AI models do not always identify the source of content they're drawing from, raising complicated copyright and attribution issues.

Lack of interpretability: Generative AI models often operate as "black boxes," making it particularly challenging to understand their decision-making process. The lack of interpretability can hinder trust and limit adoption in critical applications.

Model deployment and management processes: Choosing the right model requires experimentation and rapid iteration. And deploying large language models (LLMs) for generative AI applications is time-consuming and complex, with a steep learning curve for many developers.

What are popular generative AI models?

Generative AI models come in various forms, each with unique capabilities and applications, and the number of generative AI interfaces seems to be multiplying daily. Currently, the most popular and powerful generative AI models include:

ChatGPT

A runaway success since launching publicly in November 2022, ChatGPT is a large language model developed by OpenAI. It uses a conversational chat interface to interact with users and fine-tune outputs. It's designed to understand and generate human-like responses to text prompts, and it has demonstrated an ability to engage in conversational exchanges, answer questions relevantly, and even showcase a sense of humor.

The original ChatGPT release, built on OpenAI's GPT-3.5 series of models and available free to users, was reportedly trained on more than 45 terabytes of text data from across the internet. Microsoft integrated a version of GPT into its Bing search engine soon after. And OpenAI's upgraded GPT-4 model, available in ChatGPT by subscription, launched in March 2023.

ChatGPT uses a cutting-edge transformer architecture. The GPT stands for "Generative Pre-trained Transformer," and the transformer architecture has revolutionized the field of natural language processing (NLP).

DALL-E

DALL-E 2, also from OpenAI, is focused on generating images. It pairs CLIP, a model that links text and images, with a diffusion model to produce highly detailed and imaginative visual results based on text prompts. With DALL-E, users can describe an image and style they have in mind, and the model will generate it. Along with competitors like Midjourney and newcomer Adobe Firefly, DALL-E and generative AI are revolutionizing the way images are created and edited. And with emerging capabilities across the industry, video, animation, and special effects are set to be similarly transformed.

Google Bard

Originally built on a version of Google's LaMDA family of large language models, then upgraded to the more advanced PaLM 2, Bard is Google's alternative to ChatGPT. Bard functions similarly, with the ability to code, solve math problems, answer questions, and write, as well as provide Google search results.

Generative AI use cases

Though the technology is relatively young and rapidly evolving, generative AI has already established a firm foothold across various applications and industries. By building generative AI-powered user applications, companies can build new customer experiences that drive satisfaction, revenue, and profitability as well as new employee workflows that can improve productivity all while reducing costs and risk. Use cases include:

- AI in technology: Generative AI can help technology organizations enhance customer experience and service with interactive support and knowledge bases, accelerate product research and development by writing code and modeling trials, as well as redefine employee workflows with AI-powered assistants that can help synthesize and extract information quickly.

- AI in government: National and local government agencies are thinking about how generative AI can create more personalized, hyper-relevant public services, more accurate investigations and intelligence analysis, greater employee productivity, streamlined digital experiences for constituents, and more.

- AI in financial services: Banks, insurers, wealth management companies, credit agencies, and other financial institutions can leverage generative AI to build innovative customer experiences that impact top-line revenue, such as retail banking assistants, self-service customer chatbots, virtual financial advisors, loan assistants, and more. Generative AI can also improve employees' ability to find relevant information that speeds their workflow. Whether it's fraud detection, risk management, market research, or sales and trading, AI-powered assistants can help reduce time spent on manual tasks and accelerate decision-making.

- AI in advertising and marketing: Generative AI provides automated, low-cost content for advertising and marketing campaigns, social media posts, product descriptions, branding materials, marketing emails, personalized recommendations, and many other targeted marketing, upselling, and cross-selling strategies. Producing tailored content based on consumer data and analysis, generative AI can boost customer engagement and conversion rates. It can also help with client segmentation using data to predict the response of a target group to campaigns.

- AI in healthcare: Generative AI models can aid in medical image analysis, disease diagnosis, identifying drug interactions, and accelerating drug discovery, saving time and resources. By generating synthetic medical data, models can help augment limited datasets and improve the accuracy of diagnostic systems.

- AI in automotive and manufacturing: Generative AI gives automotive and manufacturing companies a toolkit to streamline employee operations, such as identifying operational technology issues, managing supply chains interactively, running predictive maintenance with digital twins, or deploying virtual assistants for any line of business. Organizations can also look to develop interactive digital manuals, self-service chatbots, assisted product configurators, and more to improve customer experience and retention.

- AI in art and media: Perhaps more than any other area, generative AI is revolutionizing creative fields. It can assist artists and designers in generating unique work faster, musicians in composing new melodies, game designers in rendering brand-new worlds, and filmmakers in generating visual effects and realistic animations. Film and media companies can also produce content more economically, for example by translating work into different languages using the original actor's voice.

- AI in ecommerce and retail: Generative AI can help make ecommerce more personalized for shoppers by using their purchasing patterns to recommend new products and create a more seamless shopping process. For retailers and ecommerce businesses, AI can create better user experiences from more intuitive browsing to AI-enabled customer service features using chatbots and AI-informed FAQ sections.

What's next for generative AI?

The future of generative AI is full of promise. As technology advances, increasingly sophisticated generative AI models are targeting various global concerns. AI has the potential to rapidly accelerate research for drug discovery and development by generating and testing molecule solutions, speeding up the R&D process. Pfizer used AI to run vaccine trials during the coronavirus pandemic [1], for example. AI is also an emerging solution for many environmental challenges. Notably, some AI-enabled robots are already at work assisting ocean-cleaning efforts.

Generative AI is also able to generate hyper-realistic and stunningly original, imaginative content. Content across industries like marketing, entertainment, art, and education will be tailored to individual preferences and requirements, potentially redefining the concept of creative expression. Progress may eventually lead to applications in virtual reality, gaming, and immersive storytelling experiences that are nearly indistinguishable from reality.

In the near term, the impact of generative AI will be felt most directly as advanced capabilities are embedded in tools we use every day, from email platforms and spreadsheet software to search engines, word processors, ecommerce marketplaces, and calendars. Workflows will become more efficient, and repetitive tasks will be automated. Analysts expect to see large productivity and efficiency gains across all sectors of the market.

Organizations will use customized generative AI solutions trained on their own data to improve everything from operations, hiring, and training to supply chains, logistics, branding, and communication. Developers will use it to write and refine code in a fraction of the time. Like many fundamentally transformative technologies that have come before it, generative AI has the potential to impact every aspect of our lives.

Powering the generative AI era with Elasticsearch

As more organizations integrate generative AI into their internal and external operations, Elastic designed the Elasticsearch Relevance Engine™ (ESRE) to give developers the tools they need to power artificial intelligence-based search applications. ESRE can improve search relevance and generate embeddings and search vectors at scale while allowing businesses to integrate their own transformer models.

Our relevance engine is tailor-made for developers who build AI-powered search applications, with features including support for integrating third-party transformer models, such as OpenAI's GPT-3 and GPT-4, via APIs. Elastic provides a bridge between proprietary data and generative AI, whereby organizations can provide tailored, business-specific context to generative AI via a context window. This synergy between Elasticsearch and ChatGPT helps users receive factual, contextually relevant, and up-to-date answers to their queries.
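
The pattern of supplying business-specific context through a context window can be sketched in a few lines. This is a simplified illustration, not the ESRE or OpenAI API: `retrieve` is a hypothetical stand-in that ranks documents by shared words (in practice a search engine would do this step), `build_prompt` is an assumed helper name, and the call that sends the prompt to a language model is left out.

```python
def tokenize(text):
    """Lowercase a text and split it into a set of words without punctuation."""
    return {word.strip(".,?!").lower() for word in text.split()}

def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents,
                    key=lambda doc: len(q_words & tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]

def build_prompt(question, passages):
    """Place the retrieved passages in the prompt so the model can draw on them."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n"
            f"Question: {question}")

# Made-up internal documents standing in for proprietary data.
documents = [
    "Refunds are processed within 5 business days.",
    "The office is closed on public holidays.",
    "Support is available by email and chat.",
]
question = "How long do refunds take?"
print(build_prompt(question, retrieve(question, documents, top_k=1)))
```

Because the model answers from the supplied passages rather than from its training data alone, the response can reflect private and current information.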

The marriage of Elasticsearch’s retrieval prowess and ChatGPT’s natural language understanding capabilities offers an unparalleled user experience, setting a new standard for information retrieval and AI-powered assistance. There are even implications for the future of security, with potentially ambitious applications of ChatGPT for improving detection, response, and understanding.

To learn more about supercharging your search with Elastic and generative AI, sign up for a free demo.

Explore more generative AI resources

- Choosing an LLM: The 2024 getting started guide to open-source LLMs

- AI search algorithms explained

- What is a large language model?

- The role of generative AI in higher ed IT

- Revolutionising Australian government services with generative AI

- Advanced search for the AI revolution

- ChatGPT and Elasticsearch: OpenAI meets private data

- Elastic at Microsoft Build

Generative AI FAQs

Does ChatGPT use Elasticsearch?

Elasticsearch can securely provide ChatGPT with access to an organization's data so that it generates more relevant responses.

What are some examples of generative AI?

Examples of generative AI include ChatGPT, DALL-E, Google Bard, Midjourney, Adobe Firefly, and Stable Diffusion.

What's the difference between artificial intelligence and machine learning?

Artificial intelligence (AI) refers to the broad field of developing systems that can perform tasks that simulate human intelligence, while machine learning (ML) is a subset of AI that involves the use of complex algorithms and techniques that enable systems to learn from data, identify patterns, and improve performance without explicit instructions.

Generative AI glossary

Generative adversarial networks (GANs): GANs are a type of neural network architecture consisting of a generator and a discriminator that work in tandem to generate realistic and high-quality content.

Autoencoder: An autoencoder is a neural network architecture that learns to encode and decode data, often used for tasks like data compression and generation.

Recurrent neural networks (RNNs): RNNs are specialized neural networks for sequential data processing. They have a memory component that allows them to retain information from previous steps, making them suitable for tasks like text generation.

Large language models (LLMs): Large language models, including ChatGPT, are powerful generative AI models trained on vast amounts of textual data. They can generate human-like text based on given prompts.

Machine learning: Machine learning is a subset of AI that uses algorithms, models, and techniques to enable systems to learn from data and adapt without following explicit instructions.

Natural language processing: Natural language processing is a subfield of AI and computer science concerned with the interaction between computers and human language. It involves tasks such as text generation, sentiment analysis, and language translation.

Neural networks: Neural networks are algorithms inspired by the structure and function of the human brain. They consist of interconnected nodes, or neurons, that process and transmit information.

Semantic search: Semantic search is a search technique centered around understanding the meaning of a search query and the content being searched. It aims to provide more contextually relevant search results.

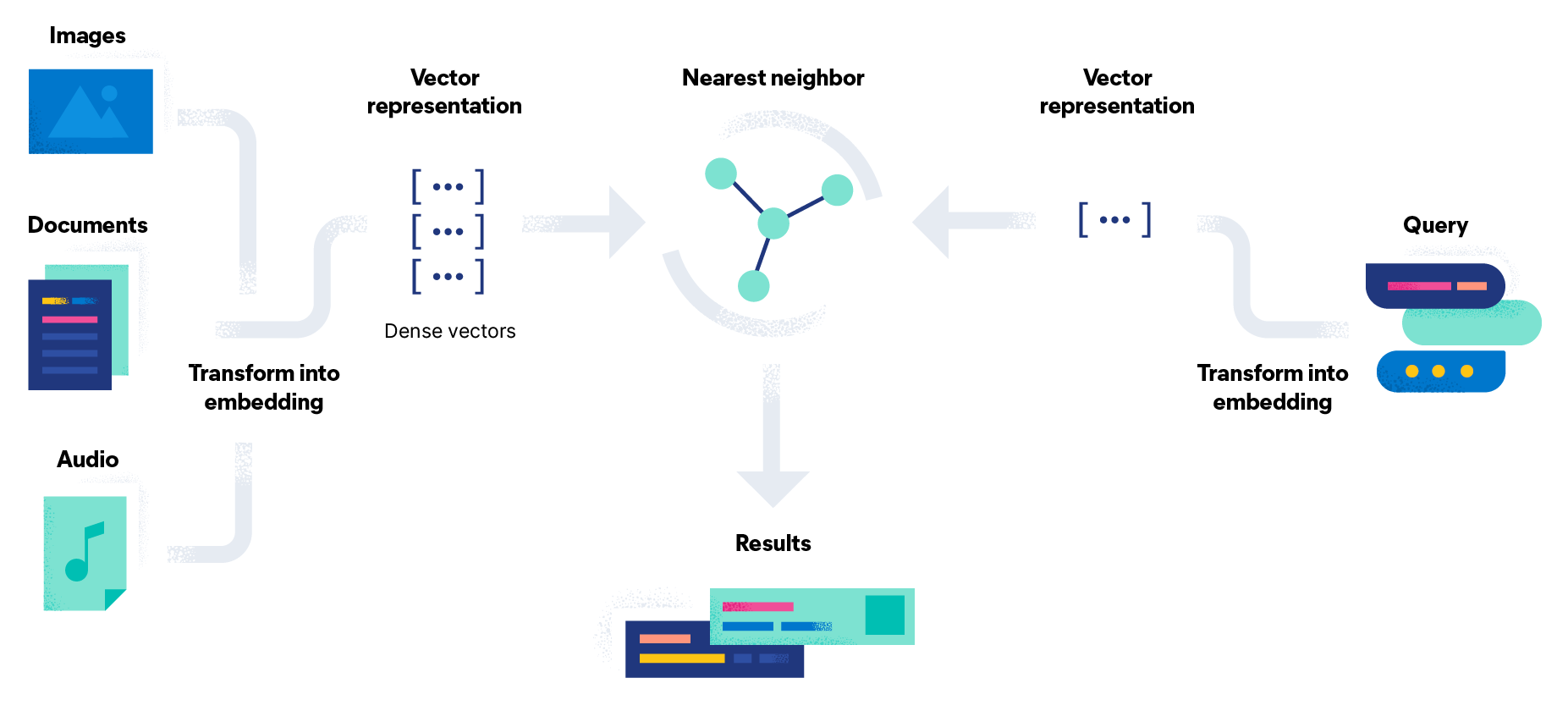

Vector search: Vector search is a technique that represents data points as vectors in a high-dimensional space. It enables efficient similarity search and recommendation systems by calculating distances between vectors.
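
As a rough illustration of vector search, the sketch below ranks items by cosine similarity to a query vector. The three-dimensional vectors are hand-written assumptions. In practice, embeddings come from a trained model and have hundreds of dimensions, and production systems typically use approximate nearest-neighbor indexes instead of comparing against every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query, index, top_k=2):
    """Return the names of the top_k vectors most similar to the query."""
    ranked = sorted(index.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [name for name, _ in ranked[:top_k]]

# Hypothetical embeddings: similar meanings are given similar vectors.
index = {
    "laptop": [0.9, 0.1, 0.0],
    "notebook computer": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}
print(nearest([1.0, 0.0, 0.0], index))  # the two computer-related items rank first
```

Because similarity is computed on vectors rather than exact keywords, "laptop" and "notebook computer" can match the same query even though they share no words. This is what makes vector search useful for semantic search.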

Footnotes

[1] Ashwani Sharma, Tarun Virmani, Vipluv Pathak, Anjali Sharma, Kamla Pathak, Girish Kumar, and Devender Pathak, "Artificial Intelligence-Based Data-Driven Strategy to Accelerate Research, Development, and Clinical Trials of COVID Vaccine," Biomed Res Int. 2022. Published online July 6, 2022. Accessed June 27, 2023.